February 7, 2025

Disinformation-as-a-Service

The cybercrime epidemic destabilizing the world

Disinformation-as-a-service (DaaS) is emerging as a powerful and lucrative industry and is quickly becoming one of the most dangerous forms of cybercrime. Powered by AI and funded largely by adversarial nation-states such as Russia, this new form of digital warfare is transforming how false narratives spread and amplifying their potential for widespread societal disruption.

Disinformation as a profitable business

The concept of "disinformation-as-a-service" marks a disturbing shift in the cybercrime landscape. Unlike traditional hacking, which targets data or systems, DaaS criminals create and distribute false narratives at the behest of any actor with a political or financial interest in spreading them.

What makes DaaS particularly effective is its use of AI not only to optimize content for viral reach but also to tailor it to highly specific demographics. Cybercriminals can create fake news stories, videos, and misleading posts designed to resonate with targeted groups while ensuring that this content performs well on platforms such as Facebook, Twitter (now X), and Instagram. Because the content is tuned to each platform's recommendation algorithms, these campaigns can reach millions of users, many of whom don't realize they're being manipulated.

Deepfake technology and copyright issues

The proliferation of deepfake technology, which generates fake videos or images from real people's likenesses, has made it easier for cybercriminals to produce highly convincing content that can be used to manipulate public opinion. For example, disinformation campaigns can feature fabricated videos of politicians making inflammatory statements or of public figures endorsing harmful products. As deepfake technology advances, it will become increasingly difficult to tell what is real and what is fake, further complicating efforts to curb the spread of disinformation.

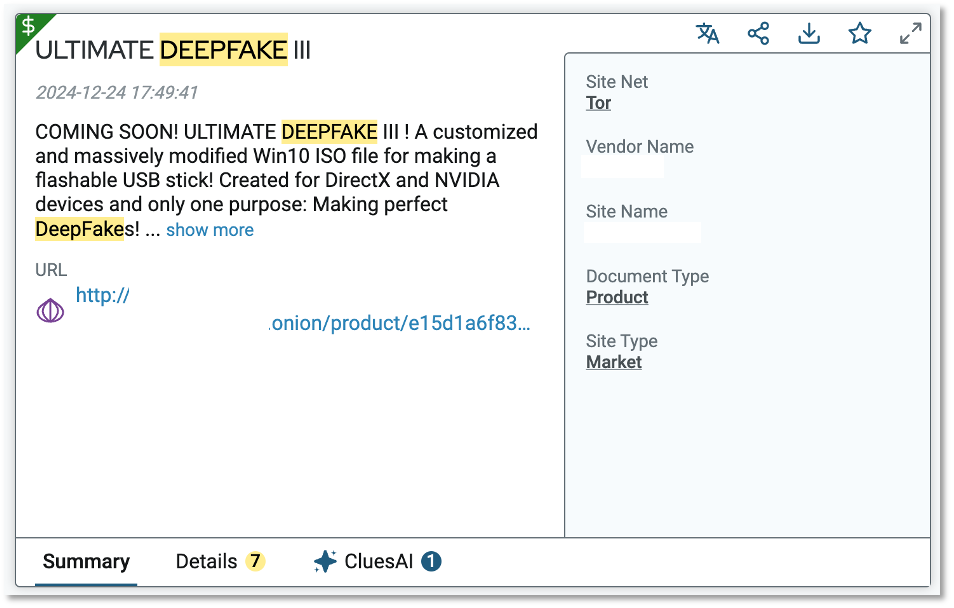

Above: A site summary in DarkBlue, showing data from a dark web advertisement for deepfake software.

Recently, YouTube announced new guidelines aimed at the growing problem of deepfakes, particularly those that use the likenesses of celebrities and public figures without consent. The policy allows individuals to claim their likenesses as intellectual property, much like the copyright protections afforded to music and other creative works. A growing number of people are beginning to assert ownership over their digital likenesses, and this shift in intellectual property rights could serve as a safeguard against the misuse of personal images in disinformation campaigns.

Russia's role in the disinformation ecosystem

Russia has long been linked to disinformation campaigns, particularly through state-sponsored activities aimed at sowing division and confusion in Western countries. With the rise of disinformation-as-a-service, however, Russian cybercriminals have found new ways to monetize this strategy. By selling disinformation as a paid service, they not only contribute to the destabilization of political systems but also profit from the growing demand for targeted misinformation.

The broader goal of these disinformation campaigns is to create an environment where people no longer trust the information they receive, making it harder for individuals to discern fact from fiction. The result is a fragmented and polarized public, more susceptible to manipulation by both state actors and private interests.

In particular, Russia has been linked to disinformation campaigns aimed at influencing elections, fostering distrust in government institutions, and amplifying social and political divides in countries such as the United States and Ukraine. As DaaS expands, these tactics are likely to become more prevalent, potentially causing even greater instability in democratic processes.

Conclusion: combating disinformation with proactive intelligence

As DaaS evolves, it presents unprecedented challenges for governments, organizations, and society at large. Dark web monitoring and advanced threat intelligence are essential to combating this growing cybercrime epidemic effectively.

CACI's DarkBlue Intelligence Suite provides the cutting-edge tools needed to track, analyze, and disrupt disinformation networks in real time. With DarkPursuit (our secure live-access tool) and AI-driven analytics, DarkBlue helps you stay ahead of emerging threats and provides the intelligence needed to protect your organization and your country from the devastating effects of disinformation campaigns.

Experience the full capabilities of the DarkBlue Intelligence Suite and gain the insights you need to identify and counter disinformation in its early stages. Request a free trial today and fortify your defenses against this growing global threat.
